PARADISEC contains, at an informed guess, in the tens-of-thousands of pages of handwritten notes relating to the languages and cultures of the Pacific region. Many of those pages pertain directly to audio-visual media also housed in the archive, such as audio or video files, and the pages might include transcriptions, translations, explanations, notes, etc, of those media files. The pages represent a potential trove of added value to the media recordings they relate to, or in and of themselves. With new technologies in handwritten text recognition (HTR) and Optical Character Recognition (OCR) fast progressing, we have an opportunity to harness the value of these pages.

To this end, PARADISEC has engaged with a range of technical approaches to converting handwritten pages in the archive to machine-readable text formats. I have worked closely on this with two PARADISEC collections, and here I am going to share my observations from the process, including the digital tools I have used.

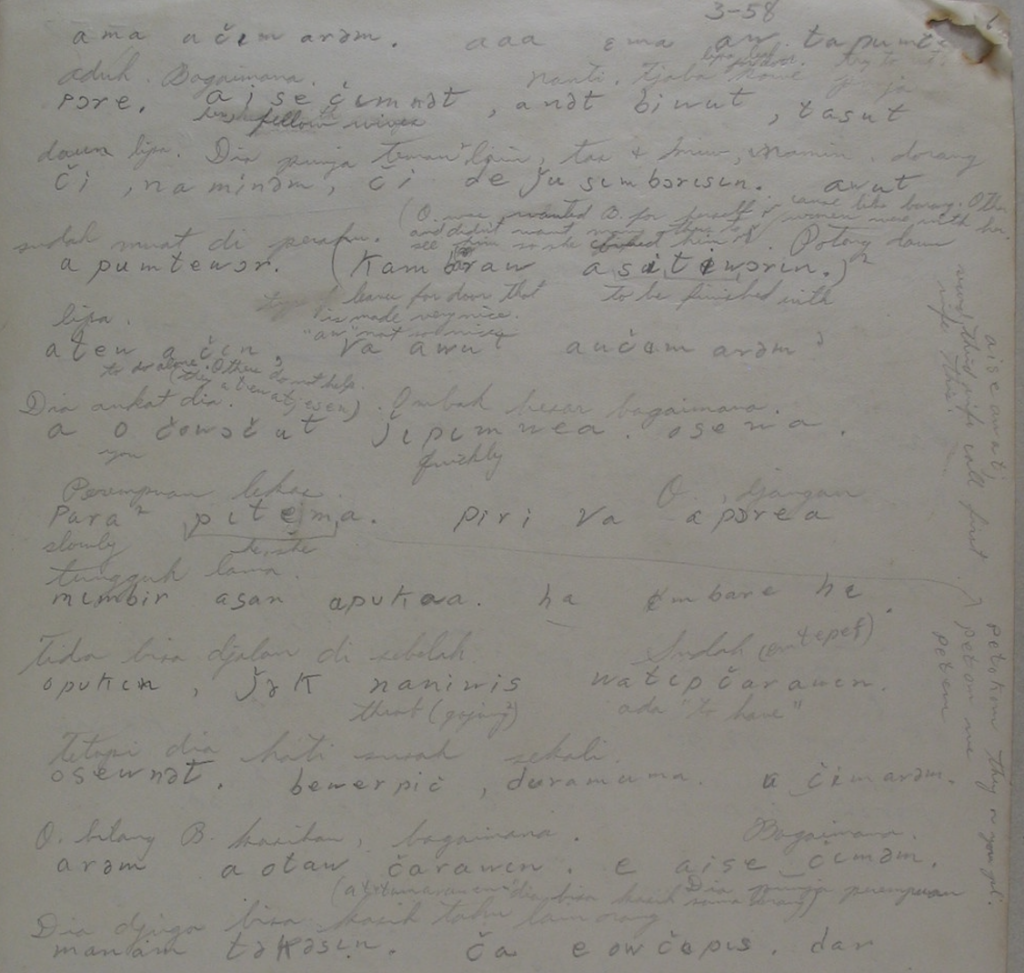

The first collection I worked on, CR1, had pages with complicated, often messy layout elements – inter-linearised translations, interjected comments, words crossed-out and over-written, sentences or words written at various angles across the page, different handwriting styles (i.e. more than one person’s handwriting) on a single page, plus unconventional characters and accents (Image below). I used the Nyingarn Platform Workspace, which makes use of Amazon’s Textract, for a primary HTR/OCR pass of the images. It was ok, sometimes, but usually the OCR output required such a large amount of manual correction that I considered it would probably be quicker to not use the OCR tool at all, and to just type it out from scratch. To decide if that was the case, I did some tests, comparing the time it took me to do it each way (from scratch, or starting with the OCR output) for, say, 5 pages. The method of using the OCR base + manual correction came out marginally quicker, so I did continue to use that method whilst I finished up my work on that collection.

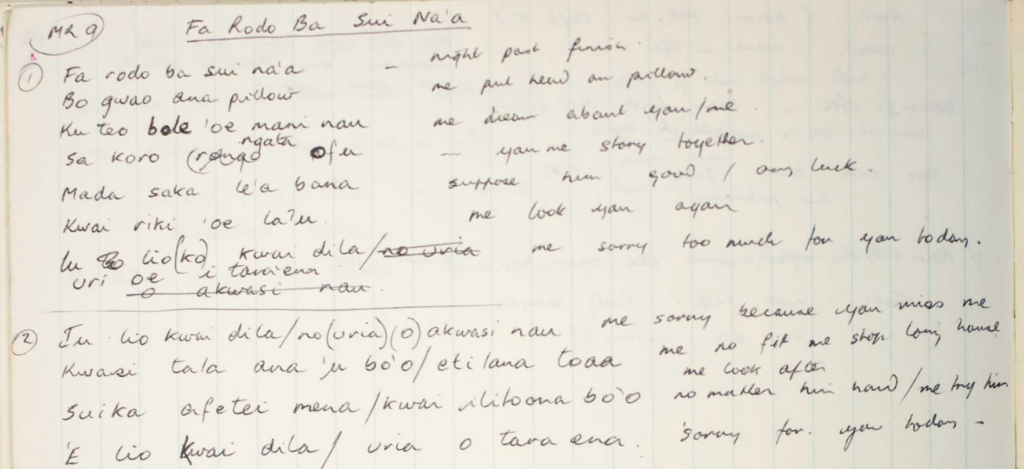

A few months later, I was again doing a similar task. The pages this time, from Ian Frazer’s IF01 collection, were generally neater than the CR1 pages I had earlier worked on. The layout was neater, with minimal unusual characters and accents, and there were around seven thousand pages with many being written in the same hand (Image below). I had since come across an HTR/OCR tool called Transkribus, which has been developed in Europe since around 2019 by READ-COOP. Transkribus offers the option of choosing the text-recognition model used to analyse your data, so you can select, for example, a model trained on specific language/s or text styles that match your data. Alternatively, they have created some generic models (which they call ‘Super Models’) that are trained on a large amount of different languages and text styles, and are therefore powerful and accurate over a wide-range of data. Finally, Transkribus allows you to train your own text-recognition model, based an a specified training set of data. They suggest this can be more effective for more “well-defined” data sets. Given the large number of IF01 pages all in the same handwriting, and of the same small number of related languages, I thought this could be a good opportunity to try creating such a custom-trained model in Transkribus, in the hope of more efficiently transposing the IF01 pages to machine-readable form.

I started by OCR’ing and then manually correcting 25 pages in Transkribus, which is their suggestion of a minimum starting point for training your own model. After manually correcting, I assigned those pages the status of ‘Ground Truth’ in Transkribus, and then I used those 25 pages to train my custom text-recognition model. Once my custom-model had been created (this job took some 30-60 minutes to complete on the Transkribus servers), I then analysed 10 previously-unanalysed pages through the custom-trained model. For comparison, I analysed the same 10 pages through one of Trankribus’ Super Models (Titan I), and also through Amazon Textract via the Nyingarn Workspace.

The results of my little experiment were, essentially, a win for big data over customisation. Despite the fact that the custom model was trained specifically on this handwriting style and language, Amazon Textract and the Transkribus Titan I model performed much better in accurately reading the handwritten text than the custom-trained model. Twenty-five pages is perhaps not a large enough initial training set, and I’m sure the results could improve with more training input. However, the results from the powerful generic tools (Amazon Textract and Transkribus Titan I model) were so markedly superior, and no doubt will continue to improve with advances in the technology, that training a custom model does seem like, for most use-cases, it might be a superfluous effort.

Comparing Amazon Textract (AT) and Transkribus Titan I model (TT), each has pros and cons. Based on their performance with my particular data set, they were about similarly accurate. AT analysed some pages a bit more accurately, whilst on other pages TT was slightly superior. On cost: Both platforms have a free trial period, and beyond that, on the face of it, AT is cheaper. This makes sense, given the economies of scale Amazon works with. However, it becomes a more complicated equation once you get into specifics. For example, AT’s basic HTR/OCR is very cheap ($1.50/1000 pages, for their ‘Detect Document Text API’), but then if you want to start adding other APIs to analyse tables, forms, or layout, these are each in the $4-50/1000 pages range. Layout analysis, for example, is something that Transkribus offers for free, and that was something that I found really useful, being able to transparently see how the layout of the text is being analysed.

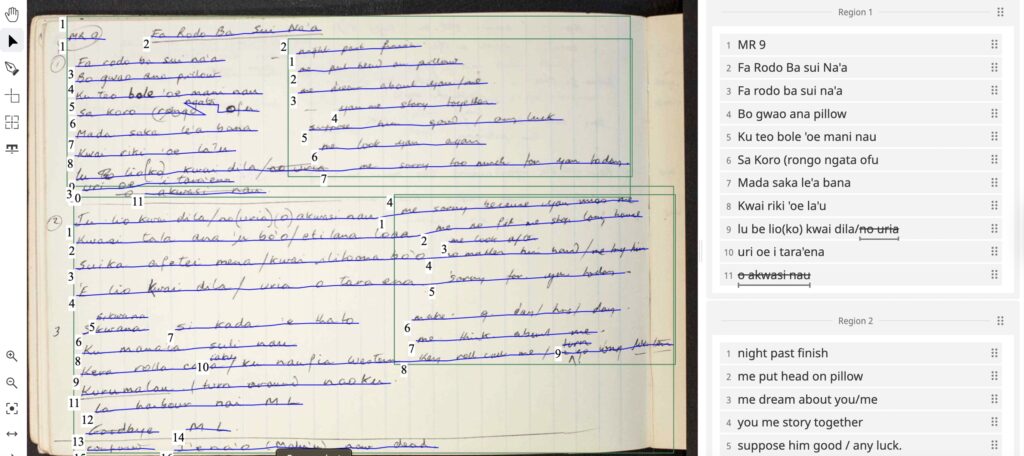

This was well illustrated with the type of data I was analysing, with the pages often being divided up loosely into sections, such as lines of a song in language on one side of the page, a line-by-line English translation on the opposite side, and then a section at the bottom of the page containing general notes. These sections are obvious to the human eye, but because they are not explicitly marked (e.g. by lines or otherwise), the machine usually does not ‘see’ them. Transkribus has been progressively improving their user interface, and their new web-based interface allows for easy and intuitive editing of the layout elements, which is very handy to have available (Image below). I have not worked with AT’s corresponding layout analysis tool, for comparison, because it is not included in the Nyingarn Workspace OCR interface, which only includes the basic Detect Document Text API.

Using these fast-advancing OCR and HTR technologies to make the millions of handwritten pages in PARADISEC and similar archives machine readable will make a vast amount of data available for analysis, both by human and machine. This will markedly improve the findability, accessibility, interoperability and reusability of the data, in line with the FAIR principles. But, of course, this must be approached with awareness towards the complementary CARE principles, which focus on the importance of generating value from Indigenous data “in ways that are grounded in Indigenous worldviews, and to advance Indigenous innovation and self-determination.” (https://www.ldaca.edu.au/about/principles/).

In conclusion, the journey to digitize handwritten archives from collections like PARADISEC using OCR and HTR technologies reveals both promise and challenges. While custom-trained models showed potential for accuracy in specific contexts, the broader capabilities of platforms like Amazon Textract and Transkribus’s Titan I model demonstrated superior performance, leveraging extensive datasets for comprehensive text recognition. The evolution of these technologies not only enhances accessibility and discoverability of invaluable cultural data but also underscores the importance of ethical considerations, aligning with principles that respect and elevate Indigenous perspectives. As these tools continue to evolve, they hold the key to preserving and sharing diverse cultural narratives, ensuring they are both accessible and beneficial to communities and researchers alike.

Follow

Follow